Assistive and autonomous systems (vehicles, work machines, aircraft) require a range of basic technology components to perform their tasks reliably and safely. One of them is robust perception of the surroundings, which is essential in all kinds of driving and working scenarios for detecting passable areas and avoiding collisions. This requires multimodal sensors as well as data analysis methods such as image processing and machine learning. Another is determining the position on a given map, based on satellite positioning data or on visual locality determination. Map data, however, are often unavailable or incomplete.

For this reason, we have spent several years researching and developing sensors and methods for the safe perception of surroundings, locality determination, and mapping, for use in assistance systems and autonomous systems across various fields of application.

Perception of surroundings

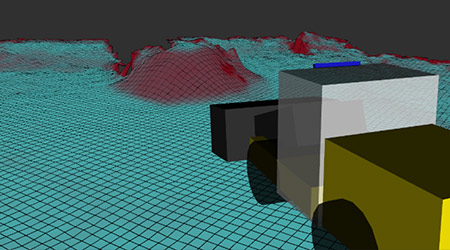

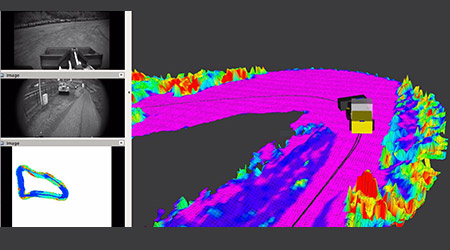

The perception of surroundings is based on multimodal sensor data from cameras (RGB, IR, multispectral), laser scanners, radar, time-of-flight, and ultrasound sensors. We research and develop methods for processing, analyzing, and fusing these data to derive the information needed for tasks such as collision avoidance, object classification, locality determination and orientation, tracking, and higher-level scene understanding. Locality determination, navigation, and the correct handling of objects by machines (e.g. cranes or forklifts) require not only 2D but also 3D models of the surroundings, built from point clouds provided by laser scanners, stereo camera systems, or radar sensors. Harsh outdoor conditions such as heavy rain, fog, dirt, or dust present a significant challenge, because they can exceed the capabilities of light-based sensors. Alternative physical modalities such as radar are therefore needed to maintain essential perception functions. Since each sensor modality has its own strengths and weaknesses, data from several modalities must be fused to compensate for those weaknesses.
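One common way to let one sensor compensate for another is to weight each measurement by its confidence. The sketch below shows inverse-variance weighted fusion of independent range estimates for a single object. It is an illustrative example under simplifying assumptions (independent Gaussian errors, measurements already associated with the same object); the `RangeMeasurement` type and `fuse_ranges` function are hypothetical names, not part of any described system.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class RangeMeasurement:
    """Distance to one object reported by a single sensor (hypothetical format)."""
    sensor: str
    distance_m: float
    std_m: float  # assumed 1-sigma measurement uncertainty


def fuse_ranges(measurements):
    """Inverse-variance weighted fusion of independent range estimates.

    Returns (fused_distance_m, fused_std_m). A sensor with a large std,
    e.g. a laser scanner degraded by fog, receives a correspondingly
    small weight, so the more reliable modality dominates.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / (m.std_m ** 2) for m in measurements]
    total = sum(weights)
    fused = sum(w * m.distance_m for w, m in zip(weights, measurements)) / total
    return fused, sqrt(1.0 / total)


# Fog scenario: the laser scanner is assigned a large uncertainty, radar a small one.
fog = [RangeMeasurement("lidar", 30.0, 5.0), RangeMeasurement("radar", 20.0, 0.5)]
distance, std = fuse_ranges(fog)
```

In the fog scenario the fused distance stays close to the radar value, because the assumed lidar variance is a hundred times larger. How the per-sensor uncertainties are estimated in practice (for example, from weather or contamination detection) is outside the scope of this sketch.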

Locality Determination & Mapping

Knowing the exact position and orientation on a reference map of the environment is an essential requirement both for many assistance systems and for the reliable operation of automated vehicles and machines. Global navigation satellite systems (GNSS) often provide this information, and their accuracy can be increased with correction signals from ground-based reference stations. However, satellites are frequently masked, especially in urban areas, in mountainous regions, or on company premises, so GNSS alone is not reliable and must be supplemented or replaced by alternative methods such as SLAM (simultaneous localization and mapping) or local radio-based systems.
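To illustrate how GNSS can be supplemented rather than relied on exclusively, here is a minimal one-dimensional Kalman filter sketch: odometry (dead reckoning) predicts the position at every step, and a GNSS fix corrects it only when one is available. This is a simplified, hypothetical example (1D state, constant noise values chosen for illustration), not a description of the systems discussed above.

```python
from typing import Optional


class Position1DFilter:
    """Minimal 1D Kalman filter: odometry predicts, GNSS corrects when available."""

    def __init__(self, x0: float, var0: float, odom_var: float):
        self.x = x0               # position estimate [m]
        self.var = var0           # variance of the estimate [m^2]
        self.odom_var = odom_var  # assumed variance added per odometry step [m^2]

    def predict(self, delta_m: float) -> None:
        # Dead reckoning: move by the odometry increment; uncertainty grows.
        self.x += delta_m
        self.var += self.odom_var

    def update(self, gnss_m: Optional[float], gnss_var: float) -> None:
        # No correction while satellites are masked (no GNSS fix).
        if gnss_m is None:
            return
        k = self.var / (self.var + gnss_var)  # Kalman gain
        self.x += k * (gnss_m - self.x)
        self.var *= 1.0 - k


# Usage: GNSS drops out in the middle (e.g. under a bridge), odometry bridges the gap.
f = Position1DFilter(x0=0.0, var0=1.0, odom_var=0.1)
for step, gnss in enumerate([1.1, None, None, 4.0]):
    f.predict(1.0)
    f.update(gnss, gnss_var=0.5)
```

While GNSS is unavailable, the estimate keeps moving with odometry and its variance grows; the next fix pulls it back. A real system would track 2D or 3D pose and could replace the odometry step with a SLAM-based motion estimate.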

Specific navigation and working scenarios, e.g. when vehicles or machines are supposed to handle objects, require detailed maps with a semantic segmentation of their content, as well as dynamic updating so that the maps reflect the current scene correctly. Such maps can be created and updated using sensors such as cameras, laser scanners, or imaging radar sensors mounted on the machines and vehicles themselves or on infrastructure facilities at the site.
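A semantic map with dynamic updating can be sketched as a 2D grid in which every cell accumulates votes for semantic classes, while older votes decay so that the map follows changes on site (for example, a pallet that has been removed). The class name `SemanticGridMap`, the decay scheme, and the `(x, y, label)` input format are hypothetical choices for illustration; the labelled points are assumed to come from an upstream segmentation step already transformed into map coordinates.

```python
from collections import defaultdict


class SemanticGridMap:
    """2D grid where each cell keeps decaying votes per semantic class."""

    def __init__(self, cell_size_m: float, decay: float = 0.9):
        self.cell_size = cell_size_m
        self.decay = decay  # in (0, 1]; values < 1 let old observations fade
        self.votes = defaultdict(lambda: defaultdict(float))

    def _cell(self, x: float, y: float):
        # Map metric coordinates to integer grid indices.
        return (int(x // self.cell_size), int(y // self.cell_size))

    def integrate(self, labelled_points):
        """Add one frame of (x, y, label) points after decaying earlier votes."""
        for cell_votes in self.votes.values():
            for label in cell_votes:
                cell_votes[label] *= self.decay
        for x, y, label in labelled_points:
            self.votes[self._cell(x, y)][label] += 1.0

    def label_at(self, x: float, y: float):
        """Most-voted class in the cell containing (x, y), or None if unobserved."""
        cell_votes = self.votes.get(self._cell(x, y))
        if not cell_votes:
            return None
        return max(cell_votes, key=cell_votes.get)


# Usage: a cell first seen as "pallet" becomes "free" once newer frames disagree.
site = SemanticGridMap(cell_size_m=0.5, decay=0.5)
site.integrate([(1.2, 0.3, "pallet")])
site.integrate([(1.1, 0.2, "free")])
```

The decay factor trades stability against responsiveness: values close to 1 keep the map steady under noisy segmentation, while smaller values let it react faster to objects being moved.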

We research and develop methods for locality determination and mapping based on camera, laser, or radar data (e.g. SLAM), and we evaluate modern systems (e.g. GNSS RTK systems).
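Laser- and radar-based SLAM front ends commonly estimate the motion between two scans by aligning their point sets, as in ICP (iterative closest point). The sketch below shows only the core step of such a method: the closed-form least-squares rigid transform between two 2D point sets whose correspondences are already known. The function name `align_2d` is hypothetical, and a full ICP loop would repeat this step after re-estimating nearest-neighbour correspondences.

```python
import math


def align_2d(source, target):
    """Least-squares rigid transform (theta, tx, ty) mapping source onto target.

    Assumes source[i] corresponds to target[i]. Applying the result gives
    target ~= R(theta) @ source + (tx, ty).
    """
    n = len(source)
    if n == 0 or n != len(target):
        raise ValueError("need equally sized, non-empty point lists")
    # Centroids of both point sets.
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    cx = sum(q[0] for q in target) / n
    cy = sum(q[1] for q in target) / n
    # Dot and cross terms of the centred point pairs determine the rotation.
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy
        bx, by = qx - cx, qy - cy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = cx - (c * sx - s * sy)
    ty = cy - (s * sx + c * sy)
    return theta, tx, ty


# Usage: a scan rotated by 90 degrees and shifted by (2, 3).
theta, tx, ty = align_2d([(0, 0), (1, 0), (0, 1)], [(2, 3), (2, 4), (1, 3)])
```

For 2D the rotation has a closed form via `atan2`, so no SVD is needed; in 3D the same idea is usually solved with an SVD of the cross-covariance matrix.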

Machine & Deep Learning

In addition to "traditional" sensor data analysis such as computer vision, machine learning is essential for robust perception of the surroundings. In recent years, we have therefore researched methods for object classification, semantic segmentation of the environment, tracking, and 3D pose estimation; trained the corresponding networks; and built the necessary computing infrastructure.
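Tracking typically links the detections of an object classifier across frames. A simple, widely used baseline is to associate existing track boxes with new detection boxes by their intersection over union (IoU). The sketch below uses greedy matching in order of descending IoU; the function names, the `(x1, y1, x2, y2)` box format, and the `min_iou` threshold of 0.3 are illustrative assumptions, not the methods developed in the projects described here.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match tracks {track_id: box} to a list of detection boxes.

    Returns (matches {track_id: detection_index},
             unmatched_track_ids, unmatched_detection_indices).
    """
    pairs = [(iou(box, det), tid, di)
             for tid, box in tracks.items()
             for di, det in enumerate(detections)]
    pairs.sort(key=lambda p: p[0], reverse=True)  # best overlaps first
    matches, used_t, used_d = {}, set(), set()
    for score, tid, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little
        if tid in used_t or di in used_d:
            continue  # each track and detection is used at most once
        matches[tid] = di
        used_t.add(tid)
        used_d.add(di)
    unmatched_t = [t for t in tracks if t not in used_t]
    unmatched_d = [i for i in range(len(detections)) if i not in used_d]
    return matches, unmatched_t, unmatched_d
```

Unmatched detections would typically start new tracks, and tracks that stay unmatched for several frames would be dropped. Greedy matching is a simplification; an optimal assignment (e.g. the Hungarian algorithm) or motion prediction can make the association more robust.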

We are also investigating artificial intelligence methods for handling different types of objects and for (partially) automated machines that learn through interaction with humans. For three-dimensional reconstruction of the environment, motion planning, and navigation, we research and apply machine learning approaches such as self-supervised and reinforcement learning. In addition, we develop tools for efficiently annotating large sensor datasets (e.g. image databases) and training networks on them. We now have extensive datasets covering aviation, transport, construction, and farming scenarios.

Examples of applications of the technology components described above include:

- Assistance systems for trams

- Automated loading and transport processes in logistics (forklifts, cranes)

- Assistance systems for construction machinery

- Airspace surveillance and assistance systems for unmanned aerial vehicles

- Automated removal of weeds in organic farming

Selected Projects

- Autility / Smarter

- Hopper

- Arctis / HARV-EST

- ODAS tram assistance system

- Tower

- Elena