Artificial intelligence (AI) is a cross-disciplinary field that models and mimics intelligence by drawing on computational, mathematical and logical principles. Learning in AI systems is commonly referred to as “machine learning”. This broad label covers a diverse set of algorithms and techniques applicable to problems across a wide range of domains. Although neural networks were first conceived several decades ago, they and similar models trained with gradient-descent-style methods have only become highly successful, competitive members of this set over the last two decades, thanks to rapid increases in computational power and available data.

We use machine learning to support existing models, especially for data-driven system control and for problems that have no analytical solution. In the same vein, our work combines expert domain knowledge with machine learning to build robust, high-performance solutions. Since explicability and interpretability are rarely trivial for such models, we equip our partners with the right tools to understand and reproduce the decisions made by the final models. We specialise in advising our partners across the wide variety of machine learning branches and in finding custom solutions to their specific problems.

Data Management & Analysis

Large amounts of data play a central role in machine learning. The data needed to parametrise or build a model are often not readily available and must first be collected. Planning the experiments that generate these data is known as design of experiments (DoE). We develop and improve DoE algorithms tailored to specific fields of application by exploiting existing domain knowledge and accounting for the peculiarities of each field. In addition, data may contain all kinds of errors. Errors that are easy to detect are identified and eliminated during data cleaning and pre-processing. Others are harder to detect and can only be uncovered through statistical data analysis, e.g. variance and correlation analysis. These methods form part of our feature selection and feature engineering pipelines: choosing the right subset of input parameters reduces training effort and model complexity while at the same time improving model accuracy. Furthermore, we categorise each individual problem and work with our partners to find, and then implement, a solution approach tailored to their needs.
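As a rough illustration of the data-collection and screening steps above, the sketch below draws a Latin hypercube design (one common space-filling DoE technique, chosen here as an assumption; the text does not name a specific method) and then screens candidate input features by variance and pairwise correlation. The function names and the thresholds `min_var` and `max_corr` are hypothetical.

```python
import random
import statistics


def latin_hypercube(n_samples, n_dims, seed=0):
    """Latin hypercube design on [0, 1)^n_dims: each dimension is split into
    n_samples equal strata and every stratum is sampled exactly once."""
    rng = random.Random(seed)
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_samples))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        columns.append([(k + rng.random()) / n_samples for k in strata])
    return [list(point) for point in zip(*columns)]  # one row per experiment


def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sum((x - ma) ** 2 for x in a) ** 0.5
    sb = sum((y - mb) ** 2 for y in b) ** 0.5
    return cov / (sa * sb)


def select_features(features, min_var=1e-8, max_corr=0.95):
    """Drop (near-)constant features, then drop any feature that is almost
    perfectly correlated with a feature that has already been kept."""
    kept = []
    for name, values in features.items():
        if statistics.pvariance(values) < min_var:
            continue  # carries (almost) no information
        if any(abs(pearson(values, features[k])) > max_corr for k in kept):
            continue  # redundant with an already selected feature
        kept.append(name)
    return kept


# Usage: an 8-run design in two inputs plus two deliberately poor features.
design = latin_hypercube(n_samples=8, n_dims=2)
x1 = [p[0] for p in design]
x2 = [p[1] for p in design]
features = {
    "x1": x1,
    "x2": x2,
    "x1_scaled": [2.0 * v for v in x1],  # perfectly correlated with x1
    "constant": [1.0] * len(x1),         # zero variance
}
selected = select_features(features)
print(selected)
```

The variance check runs before the correlation check, so the correlation is only ever computed between features with non-zero spread.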

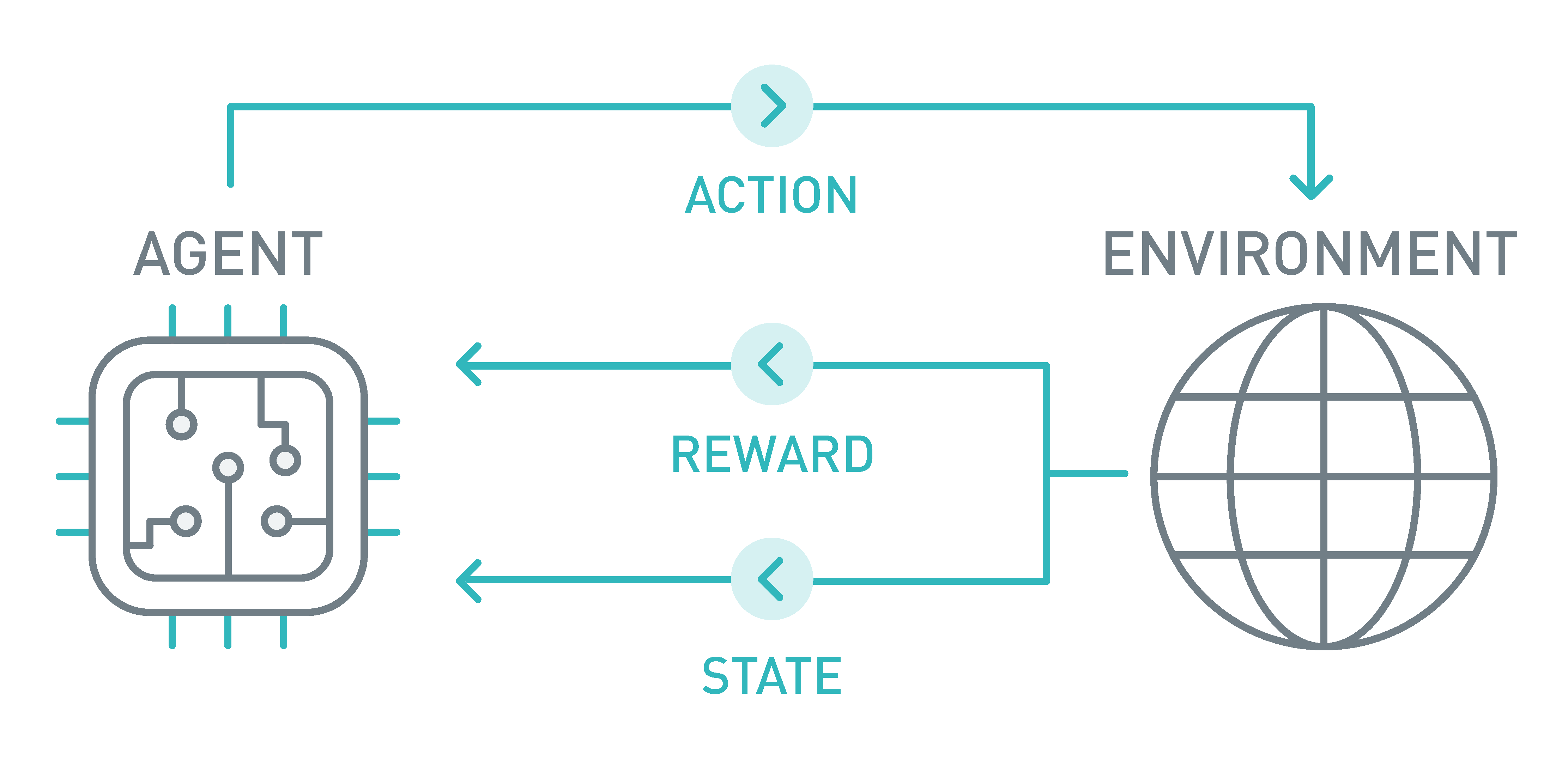

Reinforcement Learning & Approximate Dynamic Programming

In theory, multistage decision problems can be solved by dynamic programming (DP), a method for determining optimal control solutions based on Bellman’s principle of optimality. Unfortunately, solving them exactly is typically computationally intractable, because the effort grows rapidly with the size of the state and action spaces. We overcome this hurdle using reinforcement learning and approximate dynamic programming. Both produce approximate, generally suboptimal policies/control inputs that nevertheless perform adequately. Depending on the individual problem setup, we offer different solution strategies that vary in their approach to modelling, approximation and optimisation. Firstly, in model-free reinforcement learning, an agent/controller learns optimal behaviour by taking actions/inputs and receiving rewards/costs. Secondly, in a stochastic setting, a Markov decision process (MDP) can be used to model a discrete-time stochastic control process whose outcomes are partly random and partly controlled. Typically, the so-called value function and the policy are approximated by neural networks whose weights are determined using Q-learning and temporal-difference (TD) learning. Finally, in a deterministic setting, we can employ model-based, finite-horizon reinforcement learning, which in control engineering is more commonly known as Model Predictive Control (MPC).
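To make the contrast between exact DP and model-free learning concrete, here is a minimal tabular sketch on a hypothetical five-state chain: `value_iteration` applies the Bellman optimality update using the known model, while `q_learning` learns only from sampled transitions via temporal-difference updates. The toy environment, all parameter values and the use of lookup tables instead of neural networks are simplifying assumptions for illustration.

```python
import random

N_STATES = 5           # hypothetical chain of states 0..4; state 4 is terminal
TERMINAL = N_STATES - 1
ACTIONS = (0, 1)       # 0 = move left, 1 = move right
GAMMA = 0.9            # discount factor


def step(state, action):
    """Deterministic chain dynamics: reward 1 on reaching the terminal state."""
    nxt = max(state - 1, 0) if action == 0 else min(state + 1, TERMINAL)
    reward = 1.0 if nxt == TERMINAL else 0.0
    return nxt, reward, nxt == TERMINAL


def q_from_v(V, s):
    """One-step lookahead Q(s, a) = r + gamma * V(s') for every action."""
    values = []
    for a in ACTIONS:
        s2, r, done = step(s, a)
        values.append(r + (0.0 if done else GAMMA * V[s2]))
    return values


def value_iteration(tol=1e-9):
    """Exact DP: apply the Bellman optimality operator until convergence."""
    V = [0.0] * N_STATES  # V[TERMINAL] stays 0
    while True:
        new_V = V[:]
        for s in range(TERMINAL):
            new_V[s] = max(q_from_v(V, s))
        delta = max(abs(a - b) for a, b in zip(new_V, V))
        V = new_V
        if delta < tol:
            break
    policy = []
    for s in range(TERMINAL):
        q = q_from_v(V, s)
        policy.append(q.index(max(q)))  # greedy action w.r.t. V
    return V, policy


def q_learning(episodes=2000, alpha=0.5, epsilon=0.2, seed=0):
    """Model-free TD control: learns Q(s, a) from sampled transitions only."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = rng.randrange(TERMINAL)  # random non-terminal start state
        for _ in range(100):         # cap on episode length
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                best = max(Q[s])
                a = rng.choice([b for b in ACTIONS if Q[s][b] == best])  # exploit
            s2, r, done = step(s, a)
            target = r if done else r + GAMMA * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])  # temporal-difference update
            s = s2
            if done:
                break
    greedy = [Q[s].index(max(Q[s])) for s in range(TERMINAL)]
    return Q, greedy


V, dp_policy = value_iteration()
_, rl_policy = q_learning()
print([round(v, 3) for v in V])  # → [0.729, 0.81, 0.9, 1.0, 0.0]
print(dp_policy, rl_policy)
```

On a problem this small both methods recover the same "always move right" policy; approximating the tables with neural networks is what lets the Q-learning idea scale to state spaces where a full DP sweep is no longer feasible.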

Machine Learning for Regression, Classification & Clustering

We provide our partners with custom solutions powered by machine learning. Within this framework, three learning paradigms are of particular interest, each suited to a different category of problem:

- Supervised learning is applied to problems for which the “correct answers” (labels) are known. Suitable models include symbolic methods (genetic programming), kernel methods (Gaussian process regression, SVMs, SVRs), tree-based methods (random forests, gradient-boosted trees), smoothing splines (GAMs) and (deep) neural networks, the latter being suitable for a wide array of problems such as regression, online learning, image classification and time series forecasting.

- Semi- and self-supervised learning enable models to learn embeddings from data that are only partly labelled or entirely unlabelled. Successful examples include vision transformers and graph neural networks (geometric cue learning, geometric deep learning, knowledge graphs).

- Unsupervised learning tackles problems such as clustering into unknown classes (k-means, support vector clustering) and dimensionality reduction (PCA, LDA).
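The clustering case can be illustrated with a bare-bones k-means sketch (Lloyd’s algorithm) in plain Python: points are alternately assigned to their nearest centroid, and each centroid is moved to the mean of its assigned points. Initialising from randomly chosen data points and the fixed iteration cap are simplifying assumptions; in practice a library implementation with smarter initialisation (e.g. k-means++) would typically be used.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm: returns (centroids, labels) for a list of 2-D points."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k randomly chosen points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # centroids no longer move
        centroids = new_centroids
    return centroids, labels


# Usage: two well-separated groups of points with unknown class membership.
blob_a = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3)]
blob_b = [(5.0, 5.0), (5.1, 4.8), (4.9, 5.2)]
centroids, labels = kmeans(blob_a + blob_b, k=2)
print(labels)
```

The cluster indices themselves are arbitrary; only the grouping (the first three points together, the last three together) is meaningful.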

Selecting and training the right model for the right task allows us to solve problems such as static or dynamic regression, predictive maintenance, and outlier or anomaly detection.
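For the outlier-detection case, one simple and widely used baseline (chosen here purely for illustration, not necessarily the method used in our projects) flags points whose modified z-score, based on the median and the median absolute deviation (MAD), exceeds a threshold. The threshold of 3.5 follows a common rule of thumb, and the function name `mad_outliers` is hypothetical.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Return indices whose modified z-score 0.6745 * (x - median) / MAD
    exceeds `threshold` in absolute value."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread: the robust score is undefined
    return [i for i, v in enumerate(values) if abs(0.6745 * (v - med) / mad) > threshold]


# Usage: sensor readings with a single spike.
readings = [10.1, 9.9, 10.0, 10.2, 9.8, 25.0, 10.1]
print(mad_outliers(readings))  # → [5]
```

Median and MAD are used instead of mean and standard deviation because a single large outlier would otherwise inflate the spread estimate and mask itself.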

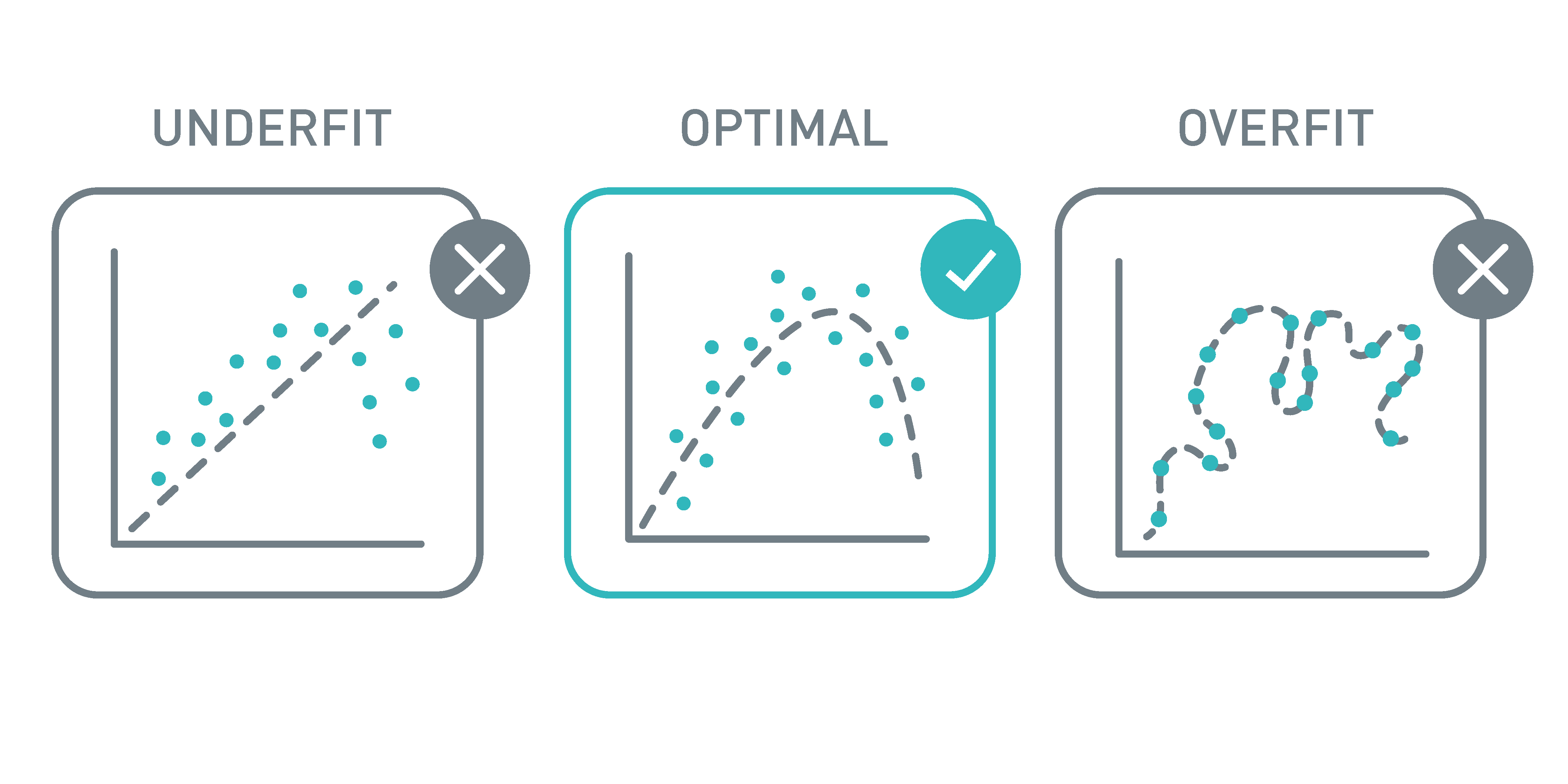

Model Selection & Assessment

The generalisation performance of a learning method refers to its predictive capability on independent test data. Assessing this performance is extremely important in practice, as it guides our choice of learning method or model and provides a measure of the quality of the final model. In model selection, we compare the performance of different models using various (often customised) information criteria and select the best one. In model assessment, we estimate the prediction error of the final, selected model on new data. To balance the bias-variance trade-off, we use subset selection, bootstrapping and boosting, as well as shrinkage and regularisation methods such as ridge, bridge and lasso regression and dropout. In doing so, we improve both prediction accuracy and interpretability. Additional analysis tools such as Shapley Additive Explanations (SHAP) can enhance model explicability and offer new insights into the underlying process.
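To keep the two steps apart, the following sketch selects the ridge penalty `lam` by k-fold cross-validation on a training split (model selection) and only afterwards estimates the prediction error of the chosen model on a held-out test split (model assessment). Cross-validation stands in here for the information criteria mentioned above; the one-dimensional, intercept-free ridge model, the candidate penalties and the synthetic data are assumptions chosen to keep the example short.

```python
import random


def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge estimate for y ~ w * x (no intercept):
    w = sum(x * y) / (sum(x ** 2) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(w, xs, ys):
    """Mean squared prediction error of the model y ~ w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


def cv_error(xs, ys, lam, k=5):
    """Average validation MSE over k folds (folds formed by index modulo k)."""
    errors = []
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        val = [i for i in range(len(xs)) if i % k == fold]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        errors.append(mse(w, [xs[i] for i in val], [ys[i] for i in val]))
    return sum(errors) / k


# Synthetic data: y = 2x + Gaussian noise (purely illustrative).
rng = random.Random(42)
xs = [rng.uniform(-1.0, 1.0) for _ in range(60)]
ys = [2.0 * x + rng.gauss(0.0, 0.3) for x in xs]
x_train, y_train, x_test, y_test = xs[:40], ys[:40], xs[40:], ys[40:]

# Model selection: choose the penalty with the lowest cross-validation error.
candidates = [0.0, 0.1, 1.0, 10.0]
best_lam = min(candidates, key=lambda lam: cv_error(x_train, y_train, lam))

# Model assessment: refit on all training data, evaluate once on unseen data.
w = fit_ridge_1d(x_train, y_train, best_lam)
test_mse = mse(w, x_test, y_test)
print(best_lam, round(w, 3), round(test_mse, 3))
```

The test split is touched exactly once, after the penalty has been fixed; reusing it during selection would make the reported error optimistically biased.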

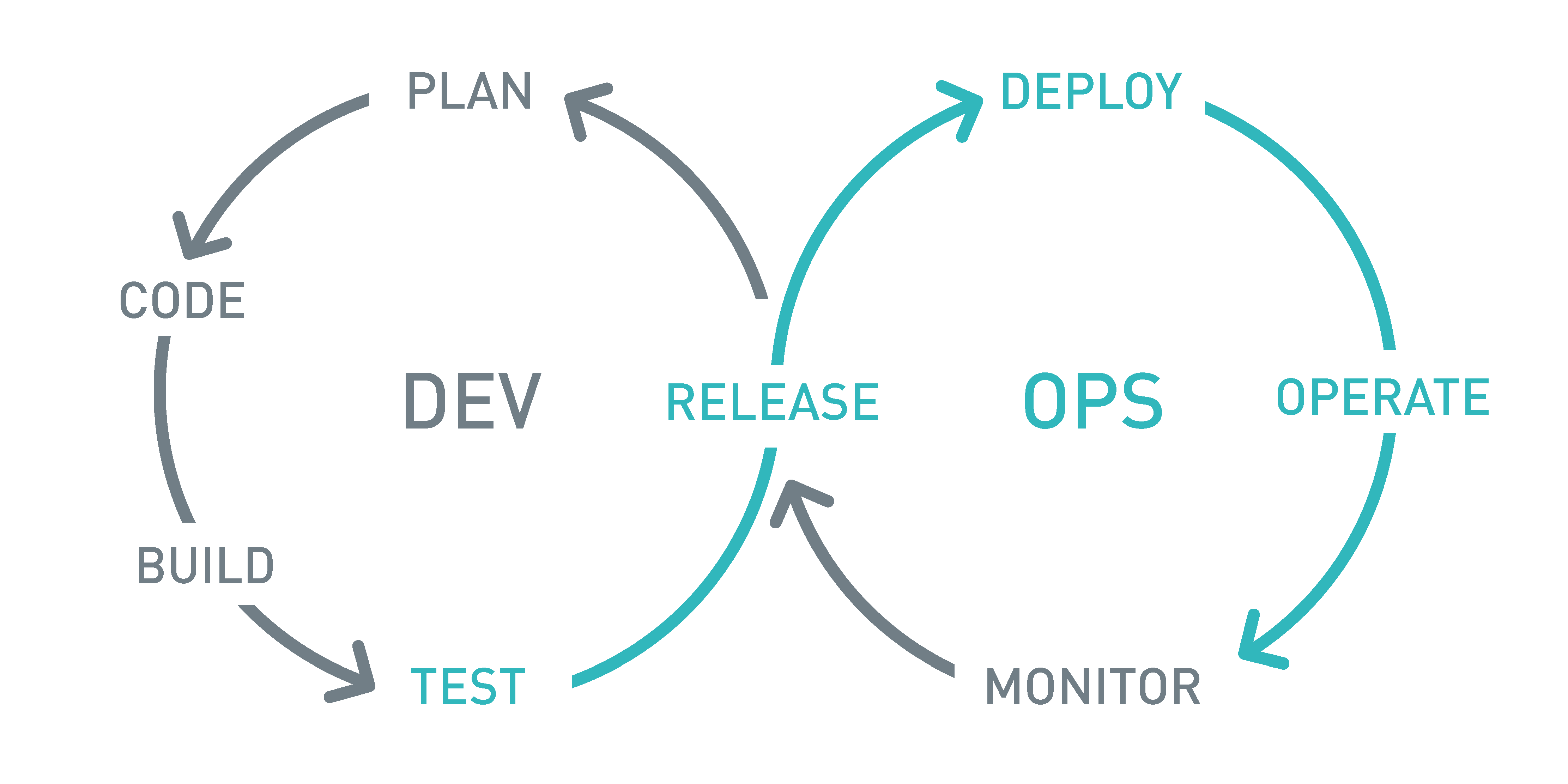

Model Deployment

Before a successfully trained model is deployed, its prediction time, accuracy and ease of use can often be improved. Depending on the individual problem, we offer transfer learning, in which parts of a well-trained model are reused within a larger, more complex model to potentially achieve superior accuracy. Additional training of the composite model at low learning rates (fine-tuning) can further improve its final performance. Since many machine learning frameworks use their own model structures, interoperability standards such as ONNX allow us to convert models into a common format and deploy them in different programming languages, depending on our partners’ needs, while also optimising inference times. In some projects, online model (re-)training is necessary, e.g. when new features or classes are introduced; in others it is often advantageous, e.g. when additional data become available or concept drift occurs. In both cases it can be automated for added convenience.
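Automated re-training upon concept drift can be sketched as a monitor that compares the model’s recent prediction error with a reference error observed at deployment time and triggers a refit once the ratio exceeds a tolerance. The class name `DriftMonitor`, the window size, the tolerance factor and the least-squares refit are hypothetical choices for illustration; real systems often rely on dedicated statistical drift-detection tests instead of this simple ratio rule.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the rolling mean absolute error exceeds
    `factor` times a reference error (hypothetical rule for illustration)."""

    def __init__(self, reference_error, window=20, factor=2.0):
        self.reference_error = reference_error
        self.factor = factor
        self.errors = deque(maxlen=window)  # keeps only the most recent errors

    def update(self, y_true, y_pred):
        """Record one prediction error; return True if re-training is advised."""
        self.errors.append(abs(y_true - y_pred))
        if len(self.errors) < self.errors.maxlen:
            return False  # not enough evidence yet
        rolling = sum(self.errors) / len(self.errors)
        return rolling > self.factor * self.reference_error


# Usage: a deployed linear model y = w * x whose true slope changes at t = 50.
w = 2.0                    # slope of the currently deployed model
monitor = DriftMonitor(reference_error=0.1)
recent = deque(maxlen=20)  # recent (x, y) pairs kept for re-training
retrain_steps = []
for t in range(100):
    x = 0.5 + (t % 10) / 10
    y = (2.0 if t < 50 else 3.0) * x  # concept drift at t = 50
    recent.append((x, y))
    if monitor.update(y, w * x):
        # Refit the slope by least squares on the most recent samples only.
        w = sum(a * b for a, b in recent) / sum(a * a for a, _ in recent)
        monitor.errors.clear()  # collect fresh evidence for the new model
        retrain_steps.append(t)
print(retrain_steps, round(w, 3))  # → [55, 75] 3.0
```

The first refit still mixes pre- and post-drift samples, so a second refit is needed before the model matches the new relation; a shorter window reacts faster but is more sensitive to noise.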
