Austrian Minister of the Interior Gerhard Karner also visited the AIT to learn about new media forensic tools in the context of the national Deepfake action plan (Image credit: BMI/Karl Schober)

The research project defalsif-AI (Detection of False Information by means of Artificial Intelligence) is funded by the KIRAS security research programme of the Austrian ministry BMLRT and coordinated by the Center for Digital Safety & Security at the AIT Austrian Institute of Technology. The project is developing a media forensic software platform that uses artificial intelligence (AI) to help users, e.g. media companies or public administration institutions, assess the credibility of text, image, video or audio material on the Internet. It focuses in particular on politically motivated disinformation, which ultimately weakens or threatens democracy and public trust in political and state institutions.

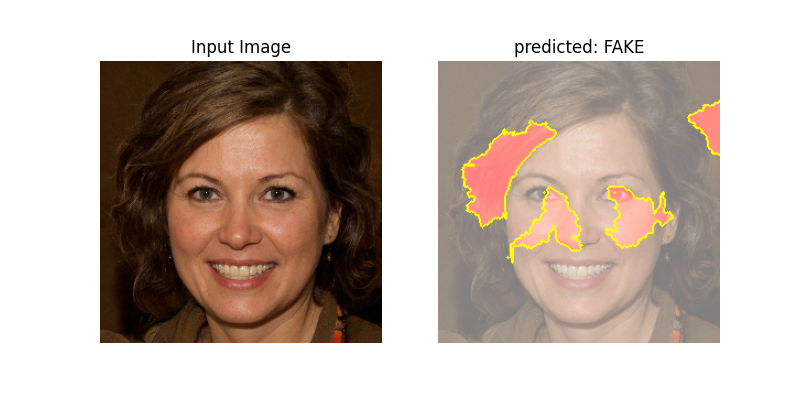

"We are developing a tool that enables media users to recognise disinformation," says AIT project manager and senior research engineer Martin Boyer. Manipulating videos, photos and texts is no longer the job of intelligence professionals. Theoretically, everyone has the tools on their computer and instructions are easy to find on the Internet. Even the production of so-called deep fakes, i.e. videos in which people - mainly politicians - are deceptively made to say things they never said, is no longer difficult. And in contrast to conventional media such as newspapers or radio, whose content goes through a verification process, such content can be disseminated online very quickly with a single click.

The new AIT tool is intended to help users recognise such fake content at an early stage. If a user becomes suspicious, they can apply the software to the media content in question. Various AI-based analysis modules and algorithms then check the content, and on this basis the tool estimates the probability that it is fake. The tool provides support, but the final decision as to whether content is classified as manipulated is made by a human being.
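The workflow described above (several analysis modules feed one probability estimate, and a human takes the final decision) can be illustrated with a minimal sketch. It is not the defalsif-AI implementation: the article does not say how module results are combined, so the weighted average, the module names, the `review_threshold` parameter and all function names below are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ModuleResult:
    """Output of one hypothetical analysis module."""
    name: str            # illustrative module name, not taken from the project
    score: float         # estimated probability in [0, 1] that the content is fake
    weight: float = 1.0  # assumed relative trust in this module


def combine_scores(results):
    """Combine module scores with a weighted average (an assumed fusion rule)."""
    if not results:
        raise ValueError("at least one module result is required")
    total_weight = sum(r.weight for r in results)
    if total_weight <= 0:
        raise ValueError("module weights must sum to a positive value")
    return sum(r.score * r.weight for r in results) / total_weight


def build_report(results, review_threshold=0.5):
    """Return an assessment; the verdict is deliberately left to a human."""
    probability = combine_scores(results)
    return {
        "estimated_probability": probability,
        "flag_for_review": probability >= review_threshold,
        "final_verdict": None,  # filled in by the human reviewer, not the tool
    }


if __name__ == "__main__":
    results = [
        ModuleResult("image_forensics", 1.0),
        ModuleResult("text_analysis", 0.5),
    ]
    print(build_report(results))
```

The report carries an estimate and a review flag but no verdict, which mirrors the article's point that the software supports the assessment while the decision stays with a person.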

The Austrian Broadcasting Corporation (ORF) covers the project in a current report (video, in German): Getäuschte Öffentlichkeit ("The Deceived Public")

- Further information on the project: https://www.defalsifai.at/

- Further information on KIRAS: https://www.kiras.at/

This image shows an identity created by artificial intelligence: a person who does not exist in reality. The media forensic software developed at AIT detects the fake. (Credit: AIT)